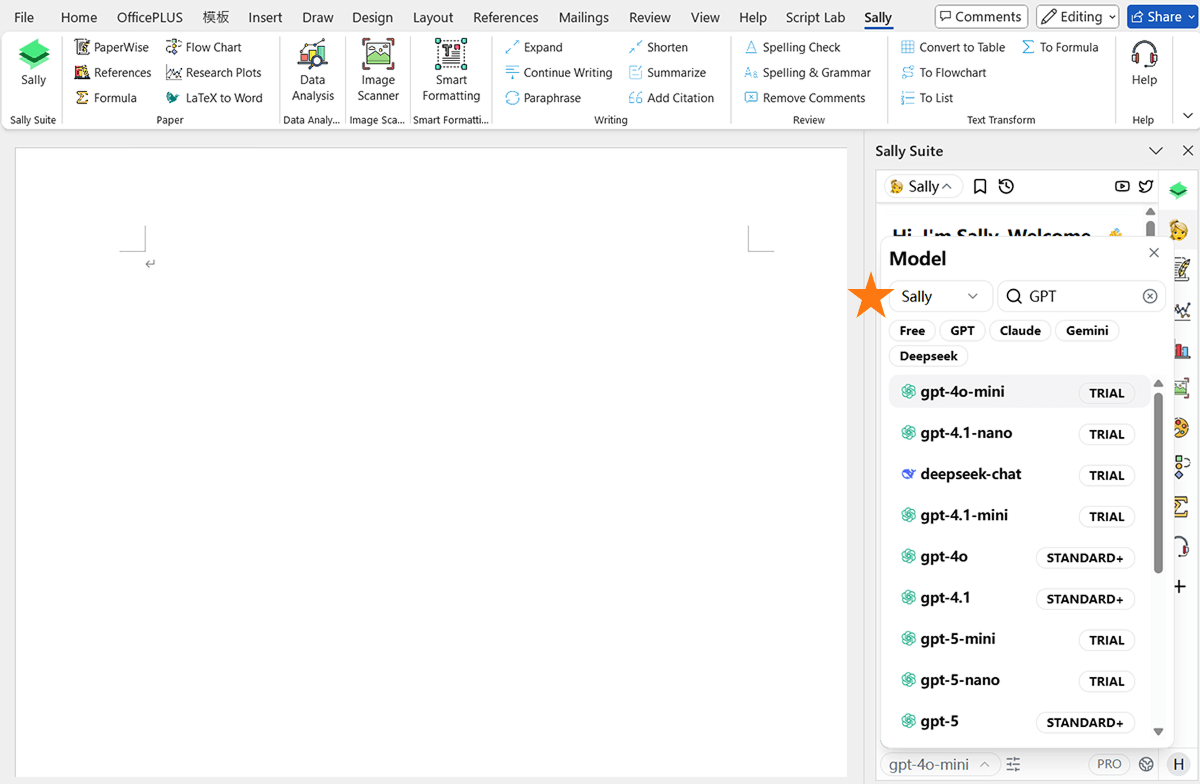

Model Configuration

Sally supports multiple models, which you can configure in the system. The following models have been tested and work with Sally. More stars indicate better performance. You can also add your own models.

- GPT-4o-mini ⭐️⭐️

- GPT-4o ⭐️⭐️⭐️

- Claude 3.5 Haiku ⭐️⭐️⭐️

- Claude 3.5 Sonnet ⭐️⭐️⭐️⭐️⭐️

- DeepSeek V3 ⭐️⭐️⭐️⭐️

Supported AI Model Providers

You can obtain models from the following providers and add them to the system. We prioritize support for GPT, Claude, and DeepSeek models.

| Provider | Status | Link |
|---|---|---|
| OpenAI | Done | link |
| OpenRouter | Done | link |
| SiliconFlow | Done | link |
| DeepSeek | Done | link |

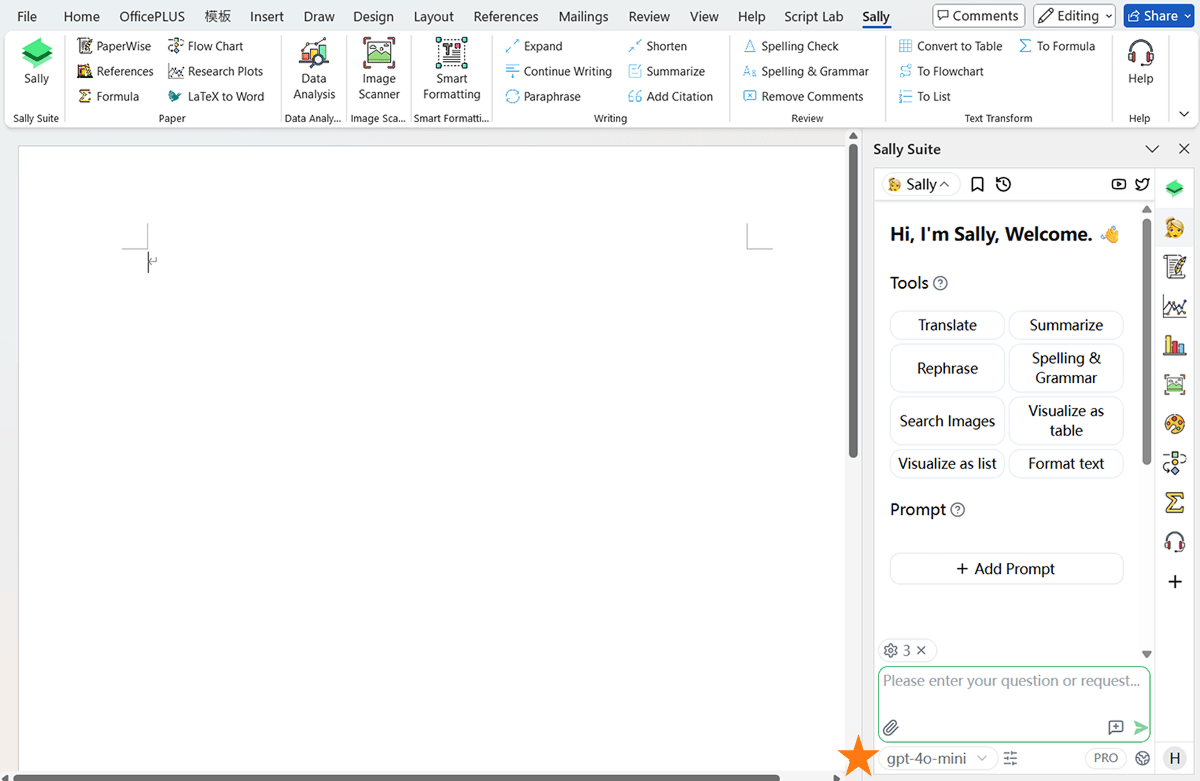

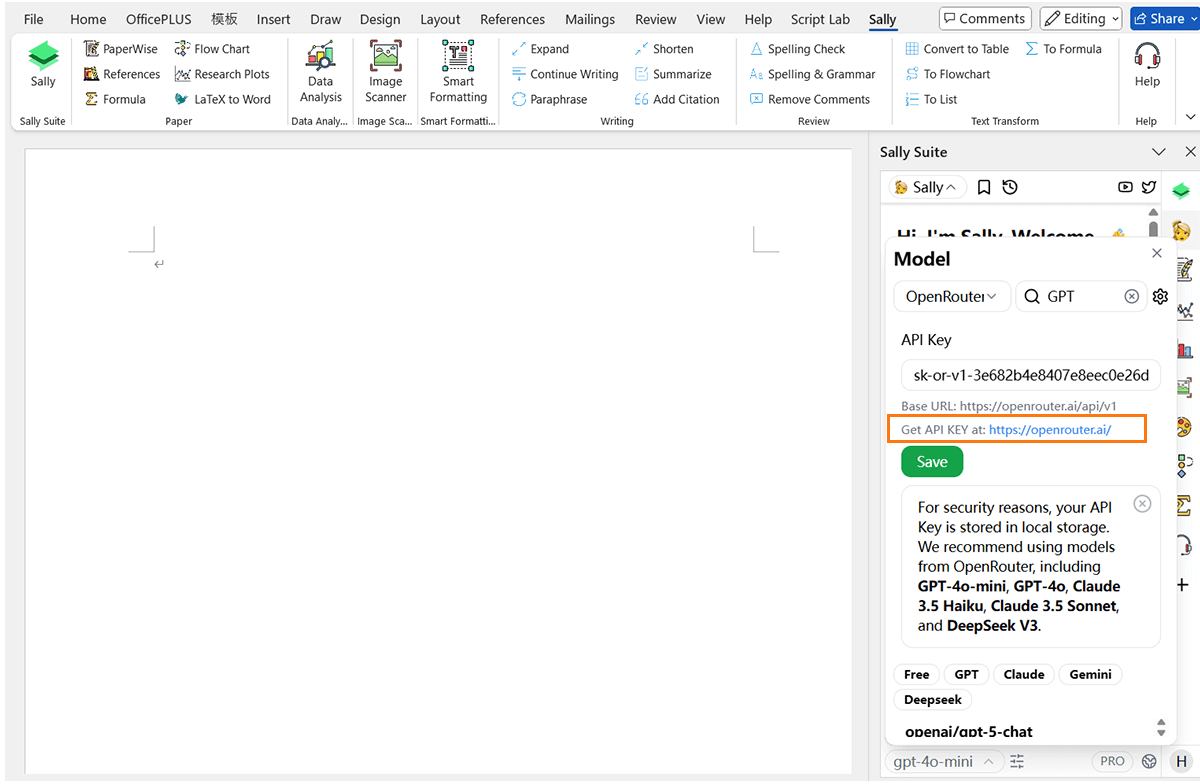

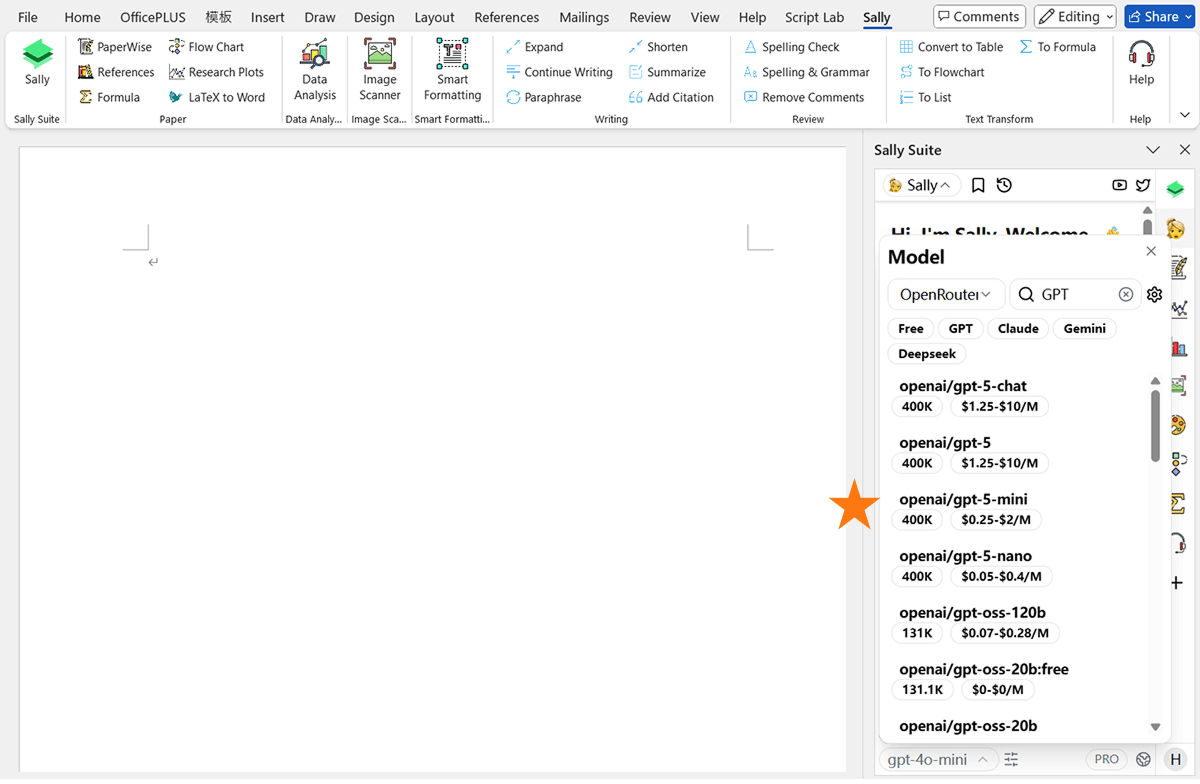

How to add a model

- Open Sally in the side panel.

- Click the model selection panel at the bottom left.

- Choose an AI provider from the dropdown.

- Enter your API key and click "Save." (You’ll need to sign up on the provider’s website to get the key.)

- Pick a model from the list—you can search to find the one you want.

Where the API key is stored

The API key is stored only in the local storage of your browser, so you don't need to worry about it leaking through our service.

Because the key is stored locally, you need to configure it again in each application where you use Sally.
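To illustrate why a locally stored key has to be entered again in each application, here is a minimal sketch of per-provider key storage. It is not Sally's actual implementation: the `example.apiKey.` prefix, the function names, and the `MemoryStore` class are all hypothetical, and the only assumption is that keys go through an interface shaped like the browser's `localStorage`.

```typescript
// Minimal subset of the Web Storage API; window.localStorage satisfies it.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

// Hypothetical key prefix, used only for this illustration.
const KEY_PREFIX = "example.apiKey.";

// Save the key for one provider, trimming stray whitespace from pasting.
function saveApiKey(store: KeyValueStore, provider: string, apiKey: string): void {
  store.setItem(KEY_PREFIX + provider, apiKey.trim());
}

// Read the key back; returns null when nothing was saved in this store.
function loadApiKey(store: KeyValueStore, provider: string): string | null {
  return store.getItem(KEY_PREFIX + provider);
}

// In-memory stand-in so the sketch can be tried outside a browser.
class MemoryStore implements KeyValueStore {
  private data = new Map<string, string>();
  getItem(key: string): string | null {
    return this.data.get(key) ?? null;
  }
  setItem(key: string, value: string): void {
    this.data.set(key, value);
  }
  removeItem(key: string): void {
    this.data.delete(key);
  }
}
```

In a browser you would pass `window.localStorage` as the store. Two separate stores (for example, two different applications) do not share entries, which is why a key saved in one is not visible in the other.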

We do not proxy your API requests

If you configure an API key in the system, API requests are sent directly from your browser to the model provider.

Direct requests can run into problems such as CORS restrictions. If you use one of the AI providers listed above, you don't need to worry about this.
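As a rough sketch of what a direct browser-to-provider request can look like, the example below builds a request for OpenAI's chat completions endpoint and sends it with `fetch`. The helper names `buildChatRequest` and `sendChat` are hypothetical, and this is not necessarily how Sally issues its requests; other providers use their own URLs and may expect different headers.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface DirectRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Build the request without sending it, so it can be inspected first.
function buildChatRequest(apiKey: string, model: string, messages: ChatMessage[]): DirectRequest {
  return {
    url: "https://api.openai.com/v1/chat/completions",
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        // The key travels only in this header, straight to the provider.
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ model, messages }),
    },
  };
}

// Send the request and return the text of the first choice.
async function sendChat(req: DirectRequest): Promise<string> {
  const res = await fetch(req.url, req.init);
  if (!res.ok) {
    throw new Error(`Provider returned HTTP ${res.status}`);
  }
  const data = (await res.json()) as { choices: { message: { content: string } }[] };
  return data.choices[0].message.content;
}
```

If a provider does not allow cross-origin requests, the browser blocks the call and `fetch` rejects before any response is available, so wrapping `sendChat` in a `try`/`catch` is a reasonable way to surface CORS failures to the user.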

Can I use LM Studio or Ollama?

LM Studio and Ollama are not currently supported, because some applications may restrict requests to a localhost API. You are welcome to try them yourself.
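If you want to check whether your environment can reach a local server at all, a small probe like the following may help. It is only a sketch: the URLs assume the default ports of Ollama (11434) and LM Studio (1234), which your setup may have changed, and `probeLocalServers` is a hypothetical helper, not part of Sally.

```typescript
// Anything with the shape of fetch(url) that resolves to an object with `ok`.
type FetchLike = (url: string) => Promise<{ ok: boolean }>;

// Assumed default endpoints; adjust if your local servers use other ports.
const LOCAL_ENDPOINTS: Record<string, string> = {
  ollama: "http://localhost:11434/api/tags",
  lmStudio: "http://localhost:1234/v1/models",
};

// Try each endpoint and report whether it answered successfully.
async function probeLocalServers(fetchFn: FetchLike): Promise<Record<string, boolean>> {
  const result: Record<string, boolean> = {};
  for (const [name, url] of Object.entries(LOCAL_ENDPOINTS)) {
    try {
      const res = await fetchFn(url);
      result[name] = res.ok;
    } catch {
      // Blocked, refused, or unreachable requests all end up here.
      result[name] = false;
    }
  }
  return result;
}
```

In a browser context you could call `probeLocalServers((url) => fetch(url))` and log the result. A `false` entry means the request was blocked or the server is not running, in which case the local model will not be usable from that environment.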